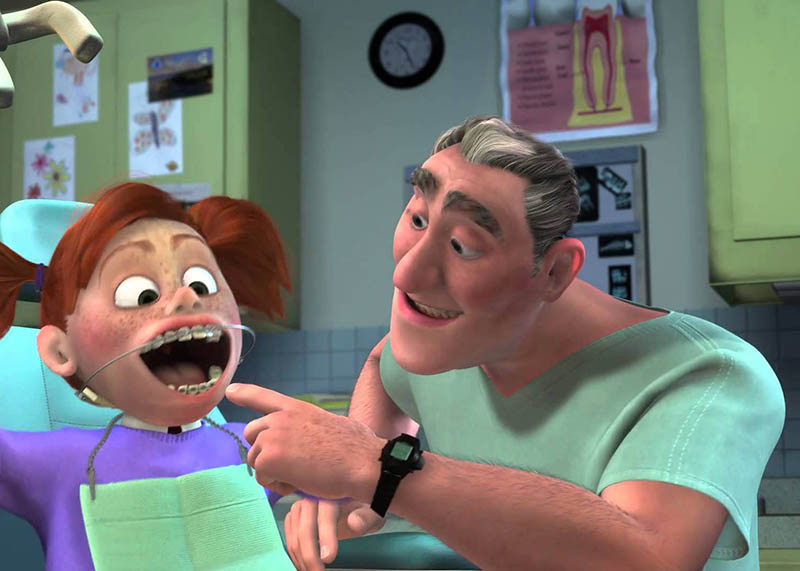

Why Hollywood Needs to Stop Hating on Root Canals

Root canals have a bad reputation they don't deserve, and we think Hollywood is to blame. Movies and television shows often portray root canal therapy as a painful and frightening procedure. The truth…